AI MacMillan: Notes from the Webinar

The workshop started with a poll of the approximately 100 to 120 participants from across the US and Canada: “How confident are you to prepare students for a workplace with generative AI?” About 51% responded that they were not confident (to a greater or lesser degree), about 25% were “neutral,” and the rest were “somewhat confident.”

Lisa Blue, Instructional Technologist, Eastern Kentucky University

Blue spoke about the emergence of AI in the workplace and the concern about over-reliance on generative AI (genAI). Blue cited the 2023 Harvard Business School Technology & Operations Mgt. Unit Working Paper No. 24-013, which studied AI’s effects on worker productivity and quality: workers who relied on genAI for content produced work that was 60% less accurate than that of a control group who did not use genAI. This finding was connected to the idea of “the jagged frontier,” where accuracy required genAI plus the worker’s own knowledge of how to use AI effectively. Because this was a 60-minute webinar, I was not 100% clear on all the concepts presented, but I’ve linked the working paper above for further explanation.

Another key element of Blue’s presentation was that AI can be a “skill-leveler” for students who are sometimes disadvantaged (neurodivergent or ELL students, for example), but it also reveals stereotypes and biases. For example, when asked to create an emoji of a chemist, genAI produced an older white man with glasses and a beard.

Blue offered:

- Tasks for instructor usage
    - Personal assistant for mundane tasks
    - Suggestions for revising student learning outcomes, course descriptions, assessments, and exercises
    - Course redesign (some LMSs already incorporate genAI)
- Tasks for student learning
    - Idea generation
    - Writing feedback
    - Adaptive tutoring
    - Generating examples

Suggestions for staying up-to-date:

- AI Educator (Facebook group, YouTube)

- arXiv (computer science archive)

- AIValley (a blog with a few contributors; also on LinkedIn and X)

- Bot Eat Brain (sounds terrifying, but I plan to take a look)

Vaugh Scribern, Associate Professor, University of Central Arkansas

Scribern is a history professor who has been rethinking his assignments and has embraced AI because he believes all educators need to help students navigate it. For now, he believes AI is clumsy enough that it fails to recognize the most important examples when citing research, but he expects that, because of AI’s capacity to learn, we will soon be unable to “catch” students using it.

Scribern described a “negative turned positive” encounter with a student. The student turned in a paper that seemed to be AI-generated. Scribern asked to discuss it with the student, and the student admitted to using AI. Scribern could not have proven it but was glad the student admitted it. The two reviewed the paper together. Scribern recommended using AI as a “research assistant” that offers feedback and encouraged the student to treat the genAI paper as a draft, guiding the student toward significant revision.

In another example Scribern offered, a student used an AI bot to come up with a topic within the scope of the project guidelines. The professor, student, and bot brainstormed together.

Tim Klein, How to Navigate Life (Newsletter and Book)

Klein is a licensed clinical social worker who co-authored the book How to Navigate Life. Klein noted that technology has always transformed the workplace, often by shifting repetitive tasks from humans to machines. For example, because of machines, less than 1% of the American workforce is now involved in farming, far fewer than 100 years ago. Skill-biased technological change used to affect only blue-collar jobs, but genAI is about to turn it toward white-collar jobs. Students need to be taught adaptability so they can reach their goals. Klein recommended using AI to “plant the seeds,” but to grow the tree, people need to make meaning and use reason. As educators, we can focus on learning to use AI to help our students become adaptable.

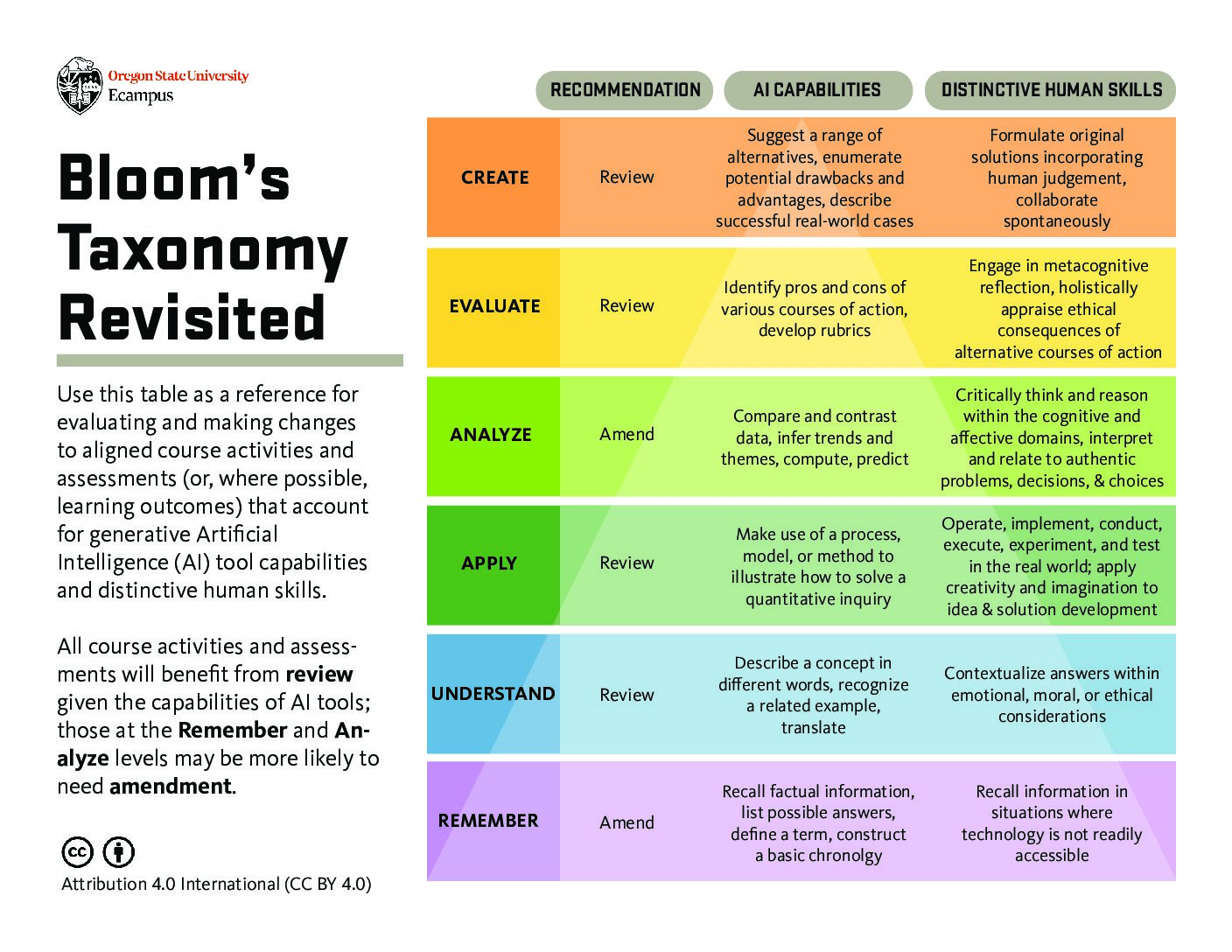

The most interesting part of this webinar to me was the idea of uniquely universal human skills: no matter the content, the skills least likely to be automated are those that require an understanding of self and others. Klein also presented the revised Bloom’s taxonomy, which I find fascinating, and sorted these skills into three groups:

- Cognitive: creativity, innovation, adaptation

- Interpersonal: collaboration, persuasion, communication

- Intrapersonal: self-management, executive function skills

Interesting Participant Thoughts

Katie McNamara (participant) offered this observation in the chat: “The craft of the ask is the best tactic to prevent AI generated work.” I agree, and I would add that we should move toward process work and creative project work. Making meaning also comes from experience and reflection.

McNamara also noted that AI detectors are skewed against multilingual and neurodivergent learners, as are the AI algorithms themselves.

Other participant ideas/observations:

- One participant has students generate and submit a ChatGPT response along with their own paper.

- Another participant observed that AI tends to use parentheses and colons frequently.

- Another thought that students who compose with genAI end up with repetitive papers.

- Another suggested using AI as a peer review partner.

- Recommended book: Beating ChatGPT with ChatGPT (which seems to me like a temporary fix at best)

Overall, my takeaway is captured in the meme below, which could just as well list many white-collar jobs.